skip to main |

skip to sidebar

I was inspired by this question on OTN forum http://forums.oracle.com/forums/thread.jspa?threadID=930469&tstart=15 and want to resolve this in Administrator by using one of data moddeling techniques.

To repeat, the problem was how we can put 2 parameters to the user (month one, month two) so after selecting values from them the report need to be like this:

Name Month1 Month2 Delta

Mark 6 4 -2

Paul 7 8 +1

Ken 10 2 -8

while we have, of course, detailed data in table.

Now, to show the solution how to resolve this in Administrator I'll use only TIMES, PRODUCTS and SALES table from SH user.

The first step is to create aliases for TIMES table in the physical layer. Why? Because we need to have two TIMES logical tables, each one is filtered by one parameter (dashboard prompts, month one and month two). We create alias for SALES table to, to add second logical table source into SALES logical fact table in the BMM to allow OBIEE server to generate second query for second filter.

This is our physical diagram:

We add PRODUCTS table as this is required for joining these two fact tables, because we will retrieve information from both fact tables (both measures) and for group by we use only PRODUCTS attributes.

Now, after we implement our model in the BMM with complex joins and three separate logical dimensions (TIMES, TIMES2 and PRODUCTS) and one logical fact table (SALES) we put two logical table sources inside it (SALES and SALES2) and each has own measures (AMOUNT_SOLD1 and AMOUNT_SOLD2). With this model OBIEE server will generate two queries, one is SALES-TIMES and the second is SALES2-TIMES2. Each combination is for one value from the filter (dashboard prompt, presentation variable, etc).

Logical diagram with model:

AMOUNT1_AMOUNT2_DIFF logical column:

You can just put ABS(SALES.AMOUNT_SOLD1-SALES.AMOUNT_SOLD2).

Add tables to the presentation area:

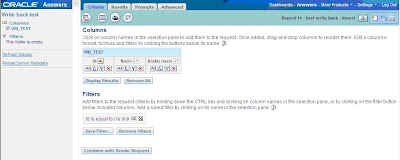

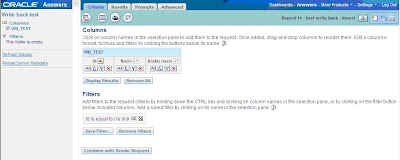

Make two dashboard prompts for each month. Can be with TIMES.CALENDAR_MONTH_DESC and TIMES2.CALENDAR_MONTH_DESC in the column editor and with these columns set to IS PROMPTED in the report filter.

Or can be like this. In this case you need to use presentation variables in the report filter.

Report:

or, if you use presentation variables:

Result:

NQQuery.log:

*Note

If you drop PRODUCTS.PROD_CATEGORY column OBIEE cannot join these two measures no mather if you include different dimensions (TIMES, TIMES2) in the filter and we get an error: The query does not reference any tables.

We could get the same report at the beginning of this post, without our new data model (TIMES-SALES and TIMES2-SALES2), with just using TIMES-SALES, and this we only aply in Answers:

PRODUCTS:PROD_CATEGORY

FILTER(SALES.AMOUNT_SOLD1 USING (TIMES.CALENDAR_MONTH_DESC = '@{pv_month_one}'))

FILTER(SALES.AMOUNT_SOLD1 USING (TIMES.CALENDAR_MONTH_DESC = '@{pv_month_two}'))

ABS

(

FILTER(SALES.AMOUNT_SOLD1 USING (TIMES.CALENDAR_MONTH_DESC = '@{pv_month_one}'))

-

FILTER(SALES.AMOUNT_SOLD1 USING (TIMES.CALENDAR_MONTH_DESC = '@{pv_month_two}'))

)

The result is the same:

The NQQuery.log is slightly different:

FILTER USING is also a gut solution.

If you read my post External table authentication and row-wise initialization in OBIEE ,

in Part1 - External table authentication I'm using external table for authentication of the user, group, displayname and loglevel.

I'm using b_ext_tab_auth_all block:

select username, groupname, displayname, loglevel

from HR.OBIEE_EXT_TAB_AUTH

where username=':USER'

and password=':PASSWORD'

The table OBIEE_EXT_TAB_AUTH has loglevel column number datatype in the database and when you import it to a repository it has double datatype:

Logon with some user (UserC, which has loglevel 5 in the database) and make a simple request and see NQQuery.log.

You'll see that the log in the NQQuery.log does not exist.

Try to put first in the column in the report VALUEOF(NQ_SESSION.LOGLEVEL) to see what is populated:

Ok, this is because decimal is populated and LOGLEVEL need to have integer as input to work properly.

Solution:

Leave datatype of imported table to DOUBLE and do a explicit TO_NUMBER(loglevel) conversion:

select username, groupname, displayname, to_number(loglevel)

from HR.OBIEE_EXT_TAB_AUTH

where username=':USER'

and password=':PASSWORD'

Now it works and you'll get log in the NQQuery.log.

*Note

If you just change datatype of imported table from DOUBLE to INT and leave block without change:

select username, groupname, displayname, loglevel

from HR.OBIEE_EXT_TAB_AUTH

where username=':USER'

and password=':PASSWORD'

it does not work, you can't see logs in NQQuery.log.

If you are using character for LOGLEVEL column in the database then you need to put TO_NUMBER(loglevel) also.

Here is my solution for write back (insert and update). I'm just trying to do some table data maintenance, and that is, insert and update one after the other. This solution includes two reports (one for insert and one for update, and two XML files). It's very fast to insert data, or update.

1. At first, we create table in the database:

create table wb_test(col1 number, col2 varchar2(50), col3 varchar2(255))

alter table wb_test add constraint wb_test_pk primary key (col1)

create sequence wb_test_seq start with 1 increment by 1

2. In Administrator, we need to disable cacheable option:

3. In Answers the user must have write back privilegies

4. In this write back insert/update solution user inserts new data through the one report and through the other report is doing update. Both reports are for the same database table. Write back option is taking XML file that we put in ..\OracleBI\web\msgdb\customMessages folder. Inside this XML there are SQL's for insert and/or update.

5. Insert report

Here is Id=0 in the filter. What does it mean? This insert report is taking only rows that have Id=0. So, for 'insert new row' we'll update this Id from 0 to some sequence value. You'll see later that we put update statement in insert XML. This dummy row is a necessary to be in the table, before (when the table is empty) and after we update (this is resolved by a after update trigger) because the table has the primary key (id), otherwise we have problems.

OBIEE has some problems with null values in the database. In this case we have 0 – null – null and we need to put in the col2 and col3 columns expression ifnull(WB_TEST.COL2,'n/a') and ifnull(WB_TEST.COL3,'n/a') to get null value from database and represents it as n/a.

Without this workaround if we try to insert something (test) we would have:

and we get an error:

We can ignore/fix this error, with ifnull(WB_TEST.COL2,'n/a') in the col2 and ifnull(WB_TEST.COL3,'n/a') in the col3. Try it.

Then we get:

N/a is null, so if we delete n/a from both columns we are saving null in the database with new inserted Id, and this works fine, like in case if we put some value:

After successful insert the next thing that we see is again dummy Id=0 row. This means that OBIEE saved our insert data in the database (mmarkic) i automatically give us dummy Id=o row. If we don't want to insert we close the insert report. After the user push insert button, XML code is generated.

Insert XML:

After insert the state in the database is:

We see new inserted row with Id 34, and a new dummy row appeared.

6. Update report

In this update report we show only inserted rows, with Id that is greater then zero (Id>0). We can update them. In case we don't have inserted rows this report would return no results.

In our case we have one row to update..

We have changed value from mmarkic u mmarkic2:

After insert we see in the report new data and the state in the database is:

Update XML:

XML files location:

We set writeback template here:

7. Database trigger:

create or replace trigger wb_test_af_upd_tr

after update

ON wb_test

declare

cursor c1

is select 1 as num

from wb_test

where col1=0;

v1 number;

begin

open c1;

fetch c1 into v1;

if v1=1 then

null;

else

insert into wb_test values (0,null,null);

end if;

end loop;

close c1;

exception

when others then

null;

end;

*Note

We use dummy row insert method.

After we insert row (we update dummy row) this new row we don't see in the update report because of Id=0 filter.

After update of dummy row we activate trigger in wb_test table which inserts then again this dummy Id=0 row if the one does not exist.

The trigger will be activated during regular update.

Update is only for rows that have Id greater then zero

We use three tables and each of them has different measure on a different dimension aggregation level. These measures contain different information so we need it all.

SH.SALES -> contains detail data about amount and quantity sold.

SH.QUANTITY_QUOTAS -> data about quantity quotas on category and month level:

create table quantity_quotas

as select sum(quantity_sold)+10 as quota, p.prod_category_id, t.calendar_month_id

from sales s, times t, products p

where s.time_id=t.time_id

and p.prod_id=s.prod_id

group by p.prod_category_id, t.calendar_month_id

HR.ANNUAL_SALES_PLANNED -> on year level.

create table annual_sales_planned as

select sum(amount_sold)*108 as annual_sales_planned, t.calendar_year_id

from sales s, times t

where s.time_id=t.time_id

group by t.calendar_year_id

Now we want to include all these threee tables (measures) in one logical fact table.

My connection pool is set to HR so I need to do:

create or replace public synonym quantity_quotas for sh.quantity_quotas

and

grant select on quantity_quotas to hr

We created manually separate higher level dimension tables that we used in QUANTITY_QUOTAS and ANNUAL_SALES_PLANNED fact tables:

CATEGORY -> select distinct PROD_CATEGORY_ID, PROD_CATEGORY from times

MONTHS -> select distinct CALENDAR_MONTH_ID, CALENDAR_MONTH_DESC, CALENDAR_YEAR_ID, CALENDAR_YEAR from times

YEARS -> select distinct CALENDAR_YEAR_ID, CALENDAR_YEAR from times

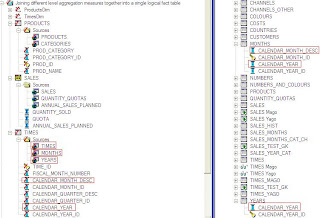

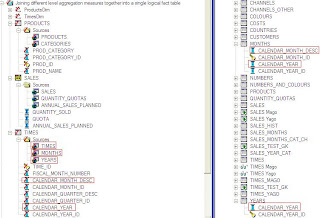

Physical diagram:

Drag and drop PROD_CATEGORY from CATEGORY table in physical layer to PROD_CATEGORY column in PRODUCTS logical table in BMM. New logical table source appears:

Note that there ae two sources for PROD_CATEGORY, this is required to generate separate SQL queries, one for SALES fact table and one for QUANTITY_QUOTAS table.

Repeat this step for TIMES logical table, drag and drop columns CALENDAR_MONTHS_DESC and CALENDAR_YEAR from MONTHS table and CALENDAR_YEAR from YEAR table in physical layer into same column names:

There are two sources for CALENDAR_MONTH_DESC and three sources for CALENDAR_YEAR:

Now drag and drop measures from fact tables from physical layer, drop it to on the top of the SALES logical table so that new logical table source and new measure appeared:

We don't need to setup for logical table sources QUANTITY_QUOTAS and ANNUAL_SALES_PLANNED aggregation content group by to be in certain logical dimension level:

This works without that. How we know this?

I introduce a similar model diagram in one of my previous post about time series modelling

http://108obiee.blogspot.com/2009/01/time-series-modelling.html.

I explained situation when OBIEE generates more than one queries, in this case three separate queries will be generated if we choose for example column CALENDAR_YEAR, because it has three dimension logical table sources and according to our physical model diagram each of them (MONTHS, YEARS and TIMES) is joined to a different measure in different fact tables (SALES, ANNUAL_SALES_PLANNED and QUANTITY_QUOTAS). So we see only three queries in NQQuery.log OBIEE join them with group by column that is common to all three queries.

Test:

We see that all three tables can summarize data on year level.

Now if we group by only by CALENDAR_MONTH_DESC:

Only first two queries are populated. The third one (ANNUAL_SALES_PLANNED fact table) is not because it contains data on year lever only, not lower. So null value is populated for ANNUAL_SALES_PLANNED measure.